Hello everyone! Starting this week, I am going to summarize my notes in a weekly review post. Here are five machine learning projects, resources, research papers, and pieces of software that I found interesting to explore this week:

Geometric Foundations of Deep Learning [website] [blog post][paper][talk]

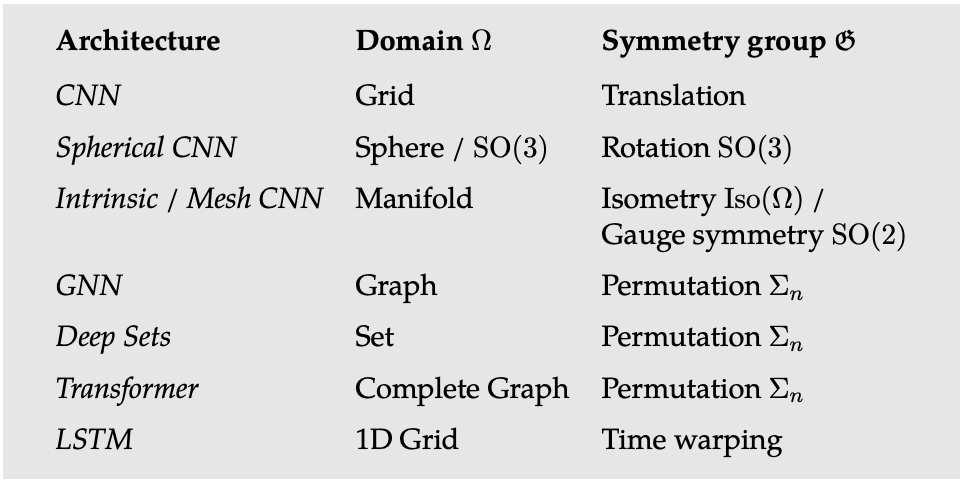

This paper outlines a geometric unification of a broad class of machine learning problems, providing a common mathematical framework from which the most successful neural network architectures, such as CNNs, RNNs, GNNs, and Transformers, can be derived. The work is motivated by Felix Klein’s Erlangen Programme, which approaches geometry as the study of invariants.

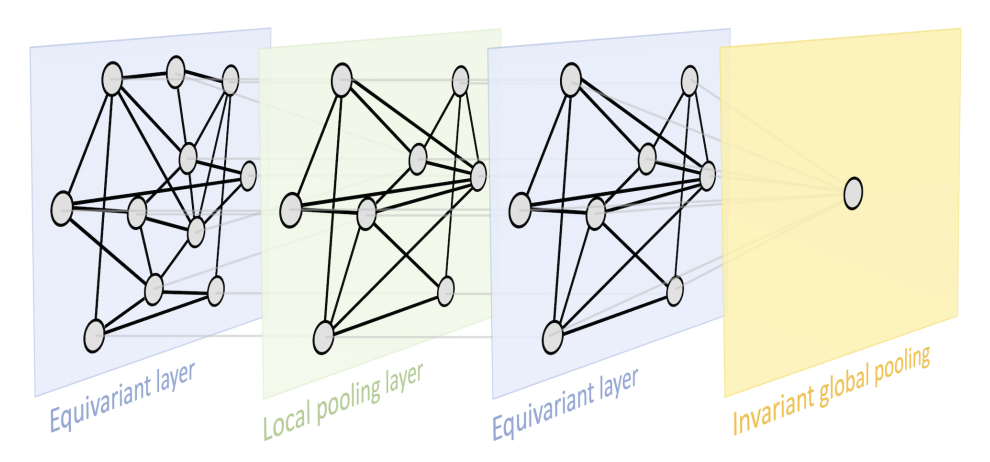

In this light, the authors study symmetries, transformations that leave an object, structure, or system unchanged, and present a general blueprint for Geometric Deep Learning, which typically consists of a sequence of equivariant layers followed by an invariant global pooling layer.

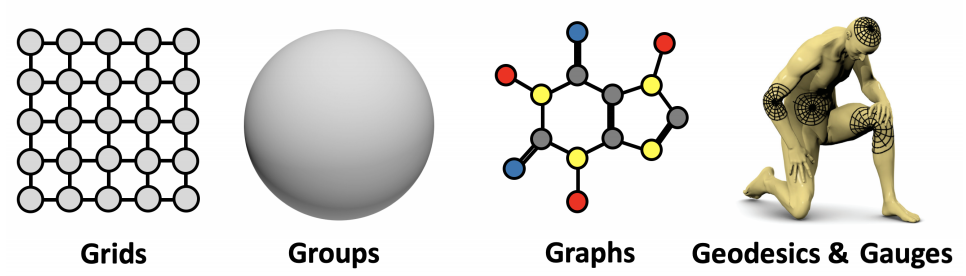

The general blueprint can be applied to different types of geometric domains such as grids, groups (global symmetry transformations in homogeneous spaces), graphs, geodesics (metric structures on manifolds), and gauges (local reference frames defined on tangent and vector bundles).
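To make the "equivariant layers, then invariant pooling" idea concrete, here is a minimal sketch on the simplest domain I could think of: a set of numbers, where the symmetry is reordering the elements. This is my own toy illustration, not code from the paper; the function names and the weights are made up for the example.

```python
import math

def equivariant_layer(xs, w_self=1.0, w_mean=-0.5):
    """Permutation-equivariant map: each element is updated from itself
    and from an order-independent summary (the mean) of the whole set."""
    mean = sum(xs) / len(xs)
    return [max(0.0, w_self * x + w_mean * mean) for x in xs]  # ReLU

def invariant_pool(hs):
    """Permutation-invariant global pooling: the sum ignores element order."""
    return sum(hs)

def model(xs):
    """Two equivariant layers followed by one invariant pooling step."""
    h = equivariant_layer(xs)
    h = equivariant_layer(h, w_self=1.5, w_mean=0.3)
    return invariant_pool(h)

xs = [0.2, -1.0, 3.5, 0.7]
perm = [2, 0, 3, 1]                      # an arbitrary reordering
xs_perm = [xs[i] for i in perm]

layer_out = equivariant_layer(xs)
layer_out_perm = equivariant_layer(xs_perm)

# Equivariance: permuting the input permutes the layer output the same way.
is_equivariant = all(
    math.isclose(layer_out_perm[k], layer_out[perm[k]], abs_tol=1e-9)
    for k in range(len(perm))
)
# Invariance: the pooled output does not change under the permutation.
is_invariant = math.isclose(model(xs), model(xs_perm), abs_tol=1e-9)
print(is_equivariant, is_invariant)
```

The same pattern carries over to the other domains by swapping the symmetry group: translations for grids (convolutions), node permutations for graphs (message passing), and so on.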

Explainable AI Cheat Sheet [website]

Jay Alammar has created a cheat sheet for Explainable AI. As more and more machine learning models are deployed in mission-critical, high-stakes applications such as medical diagnosis, it is important to ensure that the models make decisions for the right reasons. Jay groups explainable AI methods into five key categories:

- Interpretable models by design such as KNN, linear models, logistic regression

- Model agnostic methods, for example: SHAP, LIME, and perturbation

- Model specific methods, for example: using attention, gradient saliency, and integrated gradients

- Example based methods (to uncover insight about a model) using adversarial examples, counterfactual explanations, and influence functions

- Neural representation methods: feature visualization, activation maximization, SVCCA, TCAV, and probes

Check the website for links to the relevant papers in each category.
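As a small illustration of the model-agnostic, perturbation-based family, here is a sketch of permutation importance written in plain Python. It is not taken from the cheat sheet: `toy_model` is a hypothetical black box I made up, and the idea is simply to shuffle one feature at a time and measure how much the error grows.

```python
import random

def toy_model(row):
    # Hypothetical black box: relies heavily on feature 0,
    # weakly on feature 1, and ignores feature 2 entirely.
    return 3.0 * row[0] + 0.5 * row[1]

def mse(model, X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(X)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Average increase in error when a single feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_shuffled, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [toy_model(row) for row in X]

scores = permutation_importance(toy_model, X, y)
print([round(s, 3) for s in scores])
```

The method only needs to call the model, never to look inside it, which is what makes it model agnostic. Feature 0 should come out as the most important and feature 2 as irrelevant.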

MOOC for getting started with scikit-learn [website][github repo]

This MOOC is developed by scikit-learn core developers. It offers an in-depth introduction to predictive modeling using scikit-learn. The course covers the whole predictive modeling pipeline, including data exploration, modeling (using linear models, decision trees, and ensemble models), hyperparameter tuning, and model evaluation. Highly recommended for beginners!

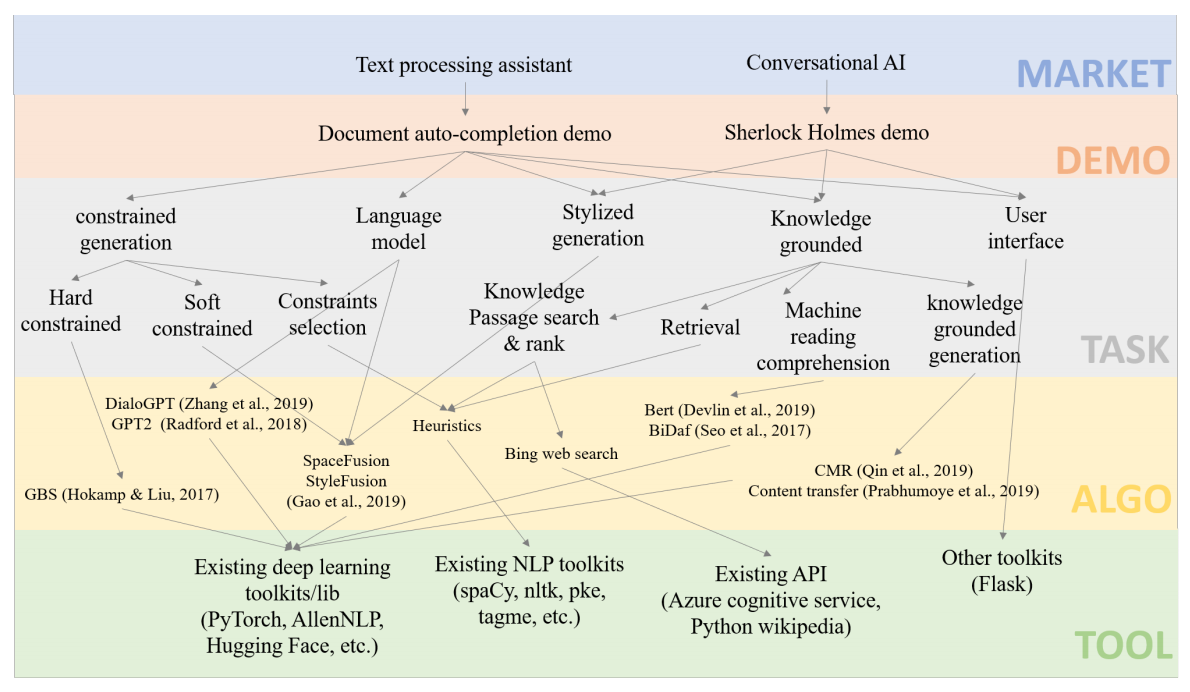
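To give a flavor of the pipeline the course walks through, here is a minimal sketch of modeling, hyperparameter tuning, and evaluation in scikit-learn. This is my own example rather than course material; the iris dataset, the logistic regression model, and the grid of `C` values are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small built-in dataset and hold out a test set for the final evaluation.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Modeling: scale the features, then fit a linear classifier.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Hyperparameter tuning: 5-fold cross-validated search over the
# regularization strength C (step names are the lowercased class names).
param_grid = {"logisticregression__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

# Model evaluation: accuracy of the best pipeline on the held-out test set.
test_accuracy = search.score(X_test, y_test)
print(search.best_params_, round(test_accuracy, 3))
```

Wrapping the scaler and the classifier in one pipeline means the scaling is refit inside each cross-validation fold, so no information from the validation data leaks into preprocessing.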

MixingBoard from Microsoft Research [paper][github]

MixingBoard is an open-source platform for quickly building knowledge-grounded, stylized text generation demos, unifying text generation algorithms in a shared codebase. It also provides CLI, web, and RESTful API interfaces. The platform has several modules for building text processing assistant and conversational AI demos. Each module tackles a specific task needed to build the demos, such as conditioned text generation, stylized generation, knowledge-grounded generation, and constrained generation.

GPT-2, DialoGPT, and SpaceFusion can be used for conditioned text generation. StyleFusion enables stylized generation via latent interpolation using soft-edit and soft-retrieval strategies. For knowledge-grounded generation, the platform combines knowledge passage retrieval, machine reading comprehension using BERT, content transfer, and knowledge-grounded response generation. Finally, hard or soft constraints can be applied during the decoding stage to encourage the generated text to contain the desired phrases.
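To show what a soft constraint at decoding time can look like, here is a toy sketch. It is not MixingBoard's implementation or API: `TOY_SCORES` is a made-up lookup table standing in for a language model, and the "constraint" is just a score bonus added to desired tokens during greedy decoding.

```python
# Hypothetical stand-in for a language model: next-token scores
# keyed by the previous token.
TOY_SCORES = {
    "<s>": {"the": 2.0, "a": 1.5},
    "the": {"weather": 1.0, "movie": 1.2, "coffee": 0.9},
    "a": {"movie": 1.0},
    "weather": {"was": 2.0},
    "movie": {"was": 2.0},
    "coffee": {"was": 2.0},
    "was": {"great": 1.5, "fine": 1.0, "</s>": 0.2},
    "great": {"</s>": 2.0},
    "fine": {"</s>": 2.0},
}

def greedy_decode(desired=(), bonus=0.0, max_len=10):
    """Greedy decoding with a soft constraint: tokens in `desired` that
    have not been generated yet get `bonus` added to their score."""
    tokens = ["<s>"]
    remaining = set(desired)
    while tokens[-1] != "</s>" and len(tokens) < max_len:
        scores = dict(TOY_SCORES[tokens[-1]])
        for tok in scores:
            if tok in remaining:
                scores[tok] += bonus   # nudge, but do not force, the choice
        best = max(scores, key=scores.get)
        remaining.discard(best)
        tokens.append(best)
    return [t for t in tokens[1:] if t != "</s>"]

unconstrained = greedy_decode()
constrained = greedy_decode(desired=("coffee",), bonus=1.0)
print(unconstrained)  # ['the', 'movie', 'was', 'great']
print(constrained)    # ['the', 'coffee', 'was', 'great']
```

A hard constraint would instead rule out any output that lacks the phrase, for example by filtering candidates, whereas the soft version only shifts the scores and can still be overridden by the model.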

The Web Conference 2021 Best Paper: Towards Facilitating Empathic Conversations in Online Mental Health Support: A Reinforcement Learning Approach. [paper][project page][talk]

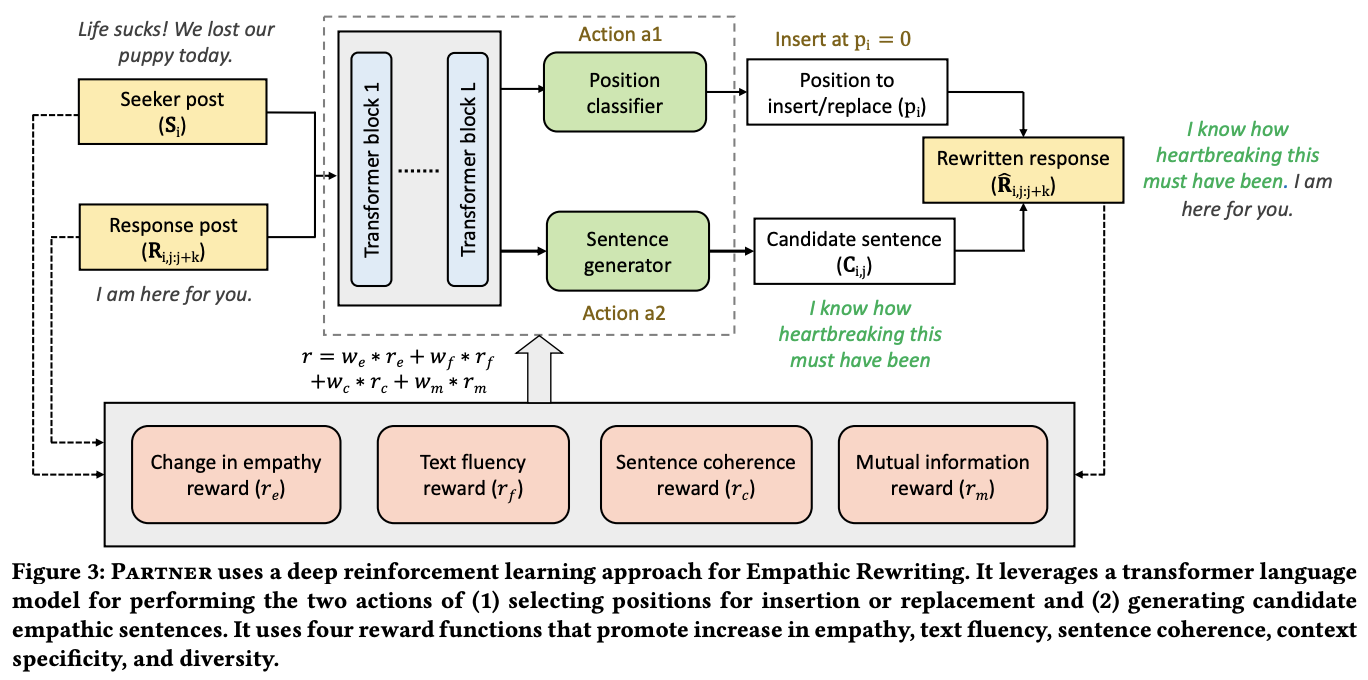

The best paper of The Web Conference 2021 addresses an important problem in mental health care. The authors present a great application of text rewriting: transforming low-empathy conversational posts into higher-empathy ones. The goal is to facilitate empathic conversation, since empathy is rarely expressed in online mental health support. A reinforcement learning agent, PARTNER, is developed to perform sentence-level edits that make conversational posts more empathic.

PARTNER observes seeker and response posts, and performs two actions:

- Determine a position in the response span for insertion or replacement.

- Generate candidate empathic sentences.

It uses four reward functions that aim to increase empathy and maintain text fluency, sentence coherence, context specificity, and diversity.
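The two actions and the combined reward can be sketched in a few lines. This is only my reading of the setup, not the authors' code: the function names, the equal weights, the example scores, and the grouping of specificity and diversity into a single reward term are all assumptions for illustration.

```python
import math

def apply_edit(response_sentences, position, candidate, replace=False):
    """Sentence-level edit: insert the candidate at `position`,
    or replace the sentence currently at that position."""
    edited = list(response_sentences)
    if replace:
        edited[position] = candidate
    else:
        edited.insert(position, candidate)
    return edited

def total_reward(components, weights):
    """Weighted sum of the individual reward terms."""
    return sum(weights[name] * components[name] for name in weights)

response = ["I understand.", "Try talking to someone."]
candidate = "That sounds really hard."   # a hypothetical generated sentence

inserted = apply_edit(response, 0, candidate)
replaced = apply_edit(response, 0, candidate, replace=True)

# Hypothetical scores; in practice each would come from its own scoring model.
components = {
    "empathy_change": 0.6,
    "fluency": 0.9,
    "coherence": 0.8,
    "specificity_diversity": 0.5,
}
weights = {name: 1.0 for name in components}   # assumed equal weighting
reward = total_reward(components, weights)
print(inserted, round(reward, 2))
```

Keeping the edits at the sentence level means most of the original response survives, so the rewritten post stays close to what the supporter actually wrote.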

Hope you enjoy this post. See you next week!